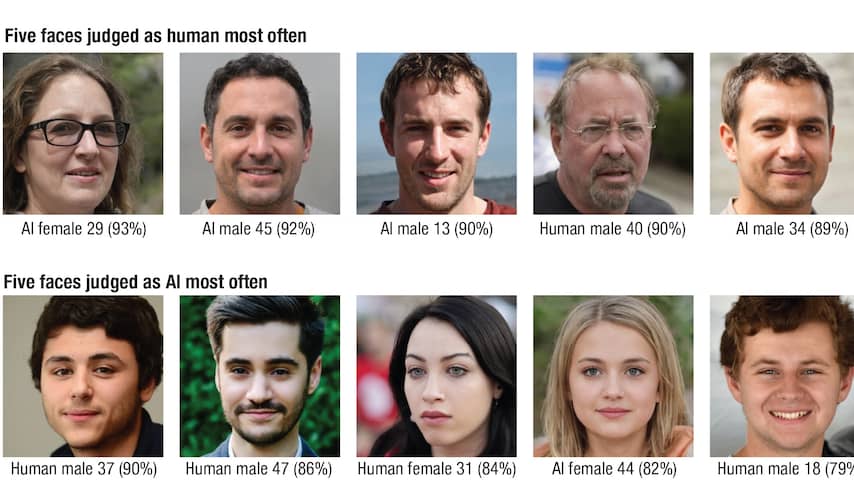

It is no longer possible to distinguish between AI images and real ones. People now rate fake faces as more real than images of actual humans. This development poses problems: soon you won’t be able to believe your eyes.

People now regard computer-generated faces of white people as more realistic than the faces of real people, Australian, British and Dutch researchers write in a recently published study.

Test subjects could not say with certainty which of the faces they were shown belonged to real people and which were fake. In fact, they more often identified AI-generated images as human.

“AI faces of white people are more in proportion than human faces,” says Australian researcher Amy Dawel. “But people actually expect human faces to be more in proportion. As a result, AI faces are often seen as more realistic.”

The study used an AI image generator that had been trained on 70,000 faces. Based on those, the computer can create portraits of new, non-existent faces.

As with many AI models, it is hard to say how the results come about, says Dawel. “We do know that the training was done largely with white faces. That may explain why these fake faces are perceived as more realistic than generated faces of people of color.”

Six fingers and weird reflections are a thing of the past

Over the past year, AI images have become increasingly realistic. For many people, this realization came when images circulated on social media of the Pope wearing a puffer jacket and of the alleged arrests of Boris Johnson and Donald Trump. Those pictures were all fake.

It is becoming increasingly difficult to distinguish fake from real. Dutch company DuckDuckGoose (not to be confused with the search engine DuckDuckGo) is working on AI-powered detection software to identify fake images. “We can still often see the difference between the real and the fake,” says DuckDuckGoose’s Mark Evenblige. “But we’ve been working on this forty hours a week for three years.”

This is probably more difficult for the average person. In the future, even Evenblige may not be able to recognize AI images. Six months ago, fake photos sometimes clearly showed someone with six fingers, or eyes with incorrect reflections. But AI is getting steadily better at eliminating these kinds of flaws.

As humans, we expect everything to be in order. This is why we are more likely to believe AI images. “AI images are so polished, that’s why people think: These images must be real,” says Evenblige. “But reality contains a certain amount of chaos.”

At the mercy of machine eyes

His colleague Baria Lutfi of DuckDuckGoose says there comes a point where people can’t tell the difference between fake and real. “But machines can do it,” she says. “Neural networks can recognize basic patterns and detect anomalies.”

These deviations are a kind of fingerprint that artificial intelligence leaves behind. Just like in real life, you often can’t see them with the naked eye, but they can be made somewhat visible using aids. “We hope that social media companies and news media will use this type of detection program,” Lutfi says. Images could then, for example, carry a label indicating whether they are real or generated by artificial intelligence.

That makes it sound as if computers will soon decide whether a photo is real or not. But we shouldn’t look at it that way, Lutfi says. “If an antivirus program on your computer announces that a virus has been found, you will probably delete the file without knowing how that antivirus program works.”

Detection tools are thus becoming useful aids, the experts say, but human interpretation remains important. “If the software indicates that an image is fake, it should be able to explain why,” says Evenblige. “So we humans can check it again.”

Realistic AI videos are coming soon too

In the near future, it is not only images that will become more realistic. It is already possible to imitate sounds realistically based on short audio fragments. AI videos are also improving rapidly. For now, they still look like mediocre cartoons, but that may change in a few months.

“Then you will no longer see just a fake photo of Boris Johnson being arrested,” says Evenblige. “Instead you will get a realistic-looking one-minute video, with the sound of knocking and flashing lights, in which you see Johnson being pulled into the police car.”
